I spend a lot of time on Instagram. Not posting stories, but researching Gen Z online political subcultures. That’s how I first stumbled across a content strategy that I’ve dubbed the “slow red-pill.”

I once followed the work of a group of far-right teenagers who devoted much of their time to radicalizing people. The strategy was simple. They’d set up meme pages that, on the surface, appeared to be run-of-the-mill Republican, MAGA-type accounts. The bio might read: 📃 Free Speech, 🔵 Debate Welcome, 🔴 Make America Great. By all outward appearances, it would look like a regular conservative Instagram page. The account would repost high-performing content from big Republican pages (DC_Draino, the_typical_liberal, etc.) and use the popularity of these images to accumulate a following of Fox, Breitbart and Turning Point USA-type viewers.

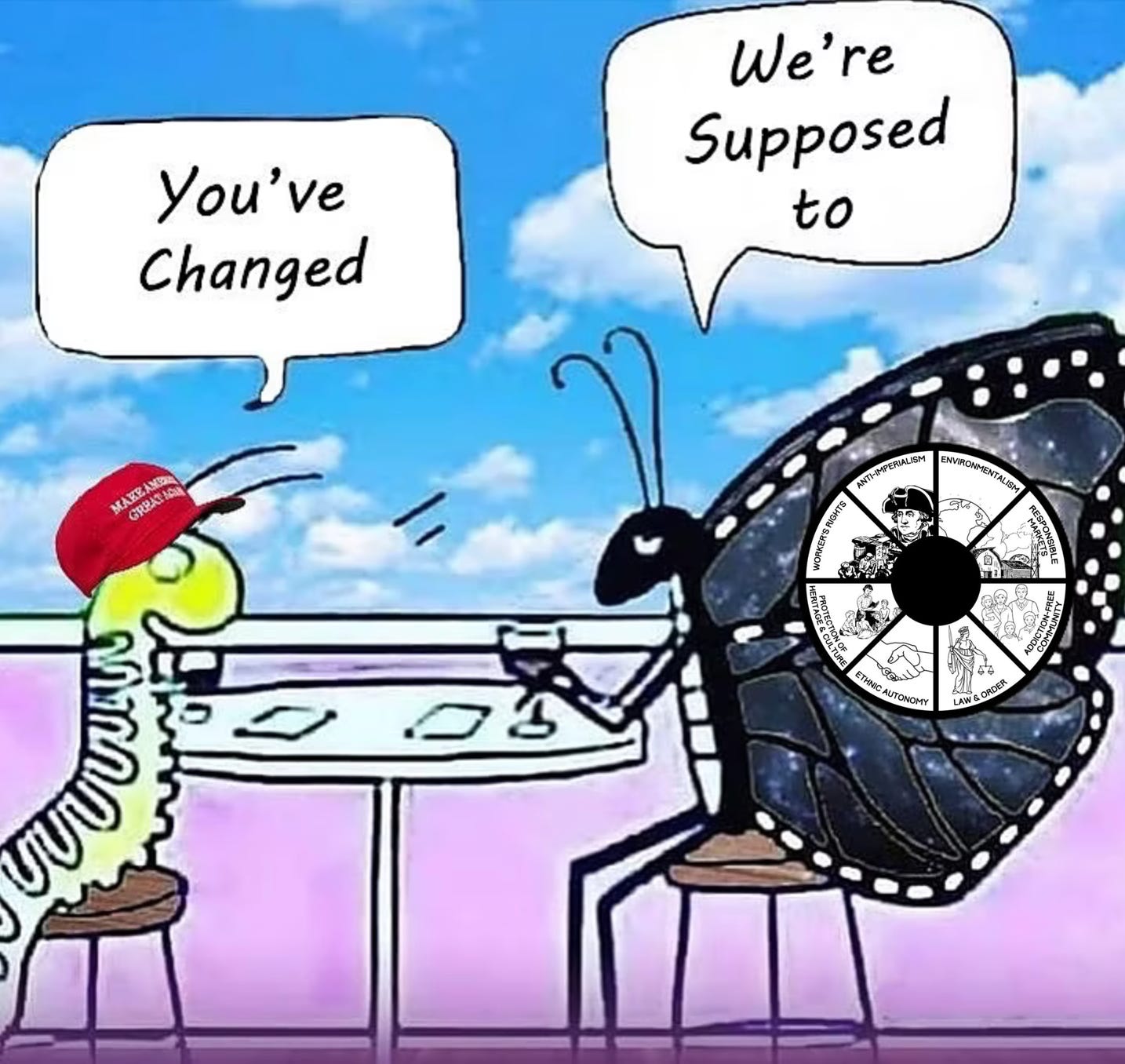

About once a week, the account would unexpectedly post extreme content. While regular posts would feature familiar conservative tropes like “having an iPhone means you can’t criticize capitalism” and “Venezuela proves that socialism doesn’t work”, extreme posts would contain racist caricatures and anti-capitalist messaging in favor of white identity.

This type of extreme racist post was frequently met with pushback from the community. Common responses included “people should be treated as individuals, not as part of a group” and “the Democrats are the ones who want to divide us up by race.” Implicit or explicit gestures of antisemitism were strongly protested by evangelical Christians. Red-pill posts would rarely stay up long. In most cases, they were only intended to appear briefly in followers’ Instagram feeds and then vanish. The account would then resume posting popular content, wait another week and try again. This process would continue for months, maybe a year. By posting mainstream conservative content most of the time, these extreme-right groups were able to build up audiences of 30,000 to 40,000 followers, which they could then incrementally expose to radical content.

Towards the end of an account’s lifespan, the admins would dramatically increase the ratio of radical content. Posts would frame shifting demographics as a “Great Replacement” orchestrated by nefarious transnational elites, or describe how climate change would soon force harsh decisions about the distribution of scarce resources in the global north. Ultimately, they would argue that, against the scale of the coming crisis, civil unrest and violence were not only permissible but necessary. At this peak, the pages would be banned rather quickly (a large follower count vastly increases the likelihood of reports). But in the course of building up that following, they would have successfully moved a large portion of their audience significantly further to the right. This was always the true objective. Getting banned was an acceptable and anticipated casualty. The following week, they would make a new account and start over.

It’s not just the American far-right that’s using this strategy. In 2018, members of the Myanmar military used similar tactics: they created fan pages for local Burmese pop stars and celebrities, such as beauty queen Shwe Eain Si, accumulated over a million combined followers, and then abruptly converted the pages into propaganda accounts spreading anti-Rohingya messaging.

The common reaction among many of today’s liberals and centrists to this growing proliferation of far-right material online is to call for greater action from Silicon Valley giants. But attempts to combat this type of content are always necessarily on the back foot. In recent years, debates over moderation and algorithmic recommendation have worked to obscure the true source of radicalization: an atomized and precarious society in which increasing numbers of people are unable to access the benefits of the mainstream and, as a result, are moving to the political fringes. There is no content moderation solution for a political problem.

Returning from a recent account ban, one of the main admins wrote: “I’m happy to be back guys, remember as long as I remain on this earth I will never stop shitposting for you lads. I’m on my 18th account.”

Perhaps it is time to accept that this kind of political mobilization is here to stay. In the past, young people were politicized through radical movements and underground subcultural spaces. But today, platforms have caused all countercultural scenes to sublimate and recollect online. Meme pages, influencers and online groups aren’t going anywhere. If we don’t like their views, the answer is not to cozy up with big tech in an endless game of whack-a-mole moderation, but to meet their message with our own.

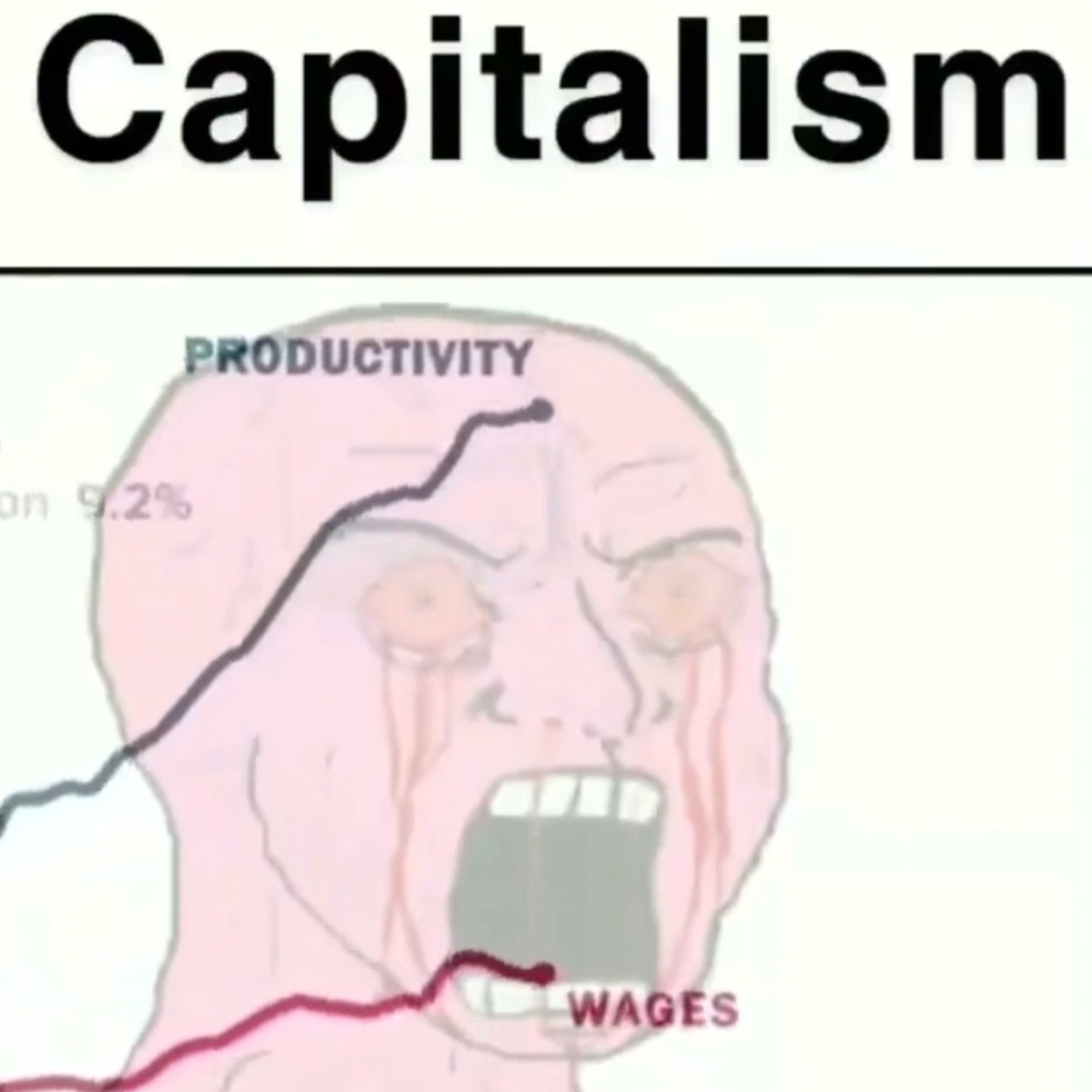

There is nothing implicitly right wing about the slow red-pill strategy. Swapping normie meme accounts into political content is an effective tactic. Anyone can use it. But instead of extreme right content, one might choose to show declining rates of union membership, the divergent trendlines of productivity and wages, or the unprecedented upward drift of wealth in the past 40 years. As the center is vacated by disillusioned masses, whether they look to the left or the right for alternatives is largely up to us.

> There is nothing implicitly right wing about the slow red-pill strategy.

Methods of communication, especially technical/network strategies, are never neutral. Whether or not this approach is implicitly "right wing," it embodies a model of deceitful manipulation that cannot be the foundation of a productive, positive politics. I think it's more important to have a strong aversion to this kind of communication strategy than it is to have, or propagate, the "correct" opinion about any of the specific policies that form the content of the messages transmitted through it.

I spend a lot of time on Instagram. Not posting stories, but researching Gen Z online political subcultures. That’s how I first stumbled across a content strategy that I’ve dubbed the “slowly inserted blue dildo.” Blah.

Is your research done through a window or a mirror?